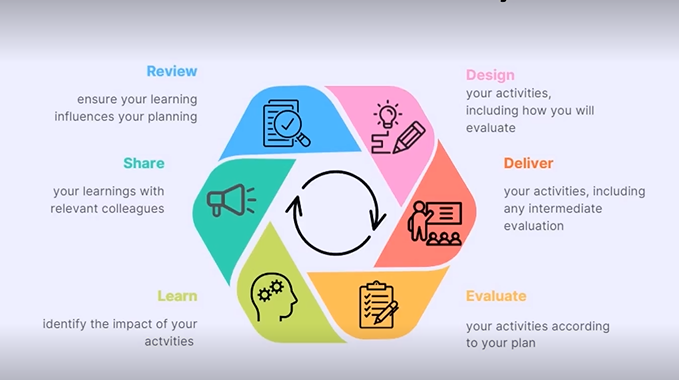

Evaluation Cycle Framework

The Evaluation Cycle Framework, developed by the University's Access and Success team, aims to guide you in effectively evaluating the impact of your project or programme. By using this Framework you help to:

- Ensure all programmes are evaluated consistently and robustly using appropriate frameworks and tools

- Provide consensus on programme design

- Frame the delivery of activities in terms of the outcomes they achieve

- Support the University's principles for transparent evaluation design, useful for all types of projects

Design: Develop your Theory of Change

Theory of Change is a framework used to describe why an activity, project or initiative is needed, how it is going to be implemented, and its expected impact and outcomes. Theory of Change helps you to map out what you need to do, what you hope to achieve and why, and what you need to consider to evaluate the effectiveness of your activity, project or initiative.

This guide provides an introduction to the Theory of Change methodology to help you structure and plan your evaluation.

- About Theory of Change resource (opens in new window)

- Theory of Change template (opens in new window)

External resources

- The Open University: Theory of Change course (opens in new window)

Design, deliver and evaluate: Developing your evaluation

To help you plan your evaluation, take the outcomes agreed in your Theory of Change and add them to the evaluation plan template. The template will prompt you to think about the evidence or data you may need to collect and when and how you'll need to collect it to run your evaluation effectively.

- Evaluation plan template (opens in new window)

The things you need to consider when planning your evaluation will be determined by the size and scale of the activity which you are evaluating. To help you think about this consider whether you are running:

- a smaller scale, local project, for example a particular course unit or programme (level 1)

- a medium scale activity or initiative, for example something division/department or school wide (level 2)

- a large scale project or activity, for example a faculty or university-wide project or initiative (level 3).

For all projects, activities and initiatives, whatever their size and scale, you should consider data collection, Student Voice, and the resources available to you.

Data collection

If your project or activity is small scale and will primarily have a local impact (level 1), for example on a particular team, course or programme, you will normally be responsible for your own data collection and will require less consultation. At this level, consider collecting data through channels that are already accessible to you, for example course unit evaluation data, quick in-course evaluations, consultation with student representatives, or other existing channels such as discussion boards or emails.

If your evaluation is of a medium or large scale activity (level 2 or 3), for example something that spans the department/division or school, you may begin to use data available from other sources such as University dashboards or PowerBI reports, for example department/division or school data on engagement and progression, or university data on access and attainment. You may also require further consultation with others to gather sufficient, quality data. It is also advisable to consider what other projects, activities or initiatives are already happening. This can help to avoid duplication, for example by sharing data across projects, and can reduce survey fatigue and potential attrition.

If the project, activity or initiative you are evaluating is particularly large (level 3), it is likely to be of high relevance to key University strategic priorities, for example the Access and Participation Plan, or may be related to wider change. At this level, you may consider having workstreams or task and finish groups to manage the delivery and evaluation.

Student Voice

How you are going to incorporate the Student Voice in your evaluation should be a key consideration for a project, activity or initiative of any size and scale. The way in which you incorporate the Student Voice will differ depending on the resource you have available to run your evaluation.

For small scale evaluations (level 1), consider incorporating the Student Voice through channels that are already accessible to you, for example course unit evaluation data, consultation with student representatives, discussion boards, and in-course feedback and evaluation. Using existing sources or creative evaluation methods (such as pre- and post- in-course activities) can help to avoid survey fatigue.

For medium scale evaluations (level 2), consider liaising with teams such as the Student Communications team to seek input from the University's Student Perspectives group, or tap into existing data such as that available from the Students' Union Research and Insight team (as well as other existing sources) to incorporate the Student Voice into your evaluation.

For large scale evaluations (level 3), as well as the methods you might consider at level 1 and level 2, you can also consider speaking with the Student Partnership team to employ a Student Partner to co-create and co-deliver your activity and evaluation with you.

Further advice, guidance and examples to help you embed the Student Voice into your evaluations are available in the Students as Evaluative Partners section of this toolkit.

Funding and resources

For small scale activities (level 1), it is likely that your evaluation is embedded into your professional practice and therefore no additional funding or resource is required.

For medium scale projects, activities or initiatives (level 2), you may need (or have access to) some funding and additional resource to help you conduct your evaluation effectively. For example, if you are planning to run student focus groups it is likely you will need to reimburse students for their time via Study Participation Vouchers. You may find it useful to look for sources of funding to apply to when planning your evaluation, for example the University's Innovation and Scholarship fund.

For medium and large scale evaluations (level 2 and level 3), your evaluation may involve other teams from across your division/department, school, faculty or University. It's important to identify what you will need, and from which teams, so that you can communicate this effectively with them. Consider approaching these teams as early as possible (or involving them in planning your evaluation) to secure their support and ensure you are able to access the information you need.

This guide builds upon the concept of Theory of Change and explains how to select an appropriate form of evaluation and develop an evaluation plan. It also introduces the purpose of comparison and why it is used to produce higher standards of evidence.

- How to guide: Developing your evaluation (opens in new window)

Deliver, evaluate and learn: Monitoring and reviewing your evaluation

This guide explains how to use evidence gathered by evaluation to inform and review your work and helps you to locate support and resources to monitor and review your evaluation.

- Monitoring and running your evaluation guide (opens in new window)

You may find this evaluation checklist spreadsheet template useful in helping you to run the evaluation.

- Evaluation checklist template (opens in new window)

Share: Disseminate your findings

Once your project, activity or initiative has concluded and you have completed your evaluation, consider how you are going to share your findings and experiences.

For small scale projects which impact your local area (level 1), it is likely that you will primarily share and disseminate the results of your evaluation through local and internal channels, for example division/department communications, relevant SharePoint sites, or team meetings.

For medium scale activities (level 2), sharing and disseminating your findings may happen locally (within your department/division or school), across the University more broadly (for example, via the University's annual Access and Success Impact Report) or externally (such as via conferences, formal publications or blogs, or other approaches and collaborations).

For large scale initiatives (level 3), such as those that are faculty or university wide, dissemination should be broad and is likely to extend beyond the institution. For example, you may wish to publish your results in a blog or paper, share with key external stakeholders, or present at conferences.

Important: If you would like to publish your evidence externally, for example in peer-reviewed journals, it is vital that you apply for ethical approval prior to collecting your data. You should factor this into your project plan and evaluation, as it can often have a long lead-in time.

The University's Scholarship toolkit provides further information, advice and guidance about sharing and disseminating your findings and applying for ethical approval.

This guide introduces the ways in which you can present and disseminate your evaluation project, internally and externally.

- How to guide: Disseminating your evaluation (opens in new window)

Review: Use your learning to inform practice

Finally, once you have finished your evaluation, it is important to take time to reflect on your learning and findings as this will help you to inform your future practice and to make enhancements to your teaching.

Case studies

These real case study examples show different approaches to evaluating teaching and learning practice across projects and activities of different size and scale. If you would like to add a case study, please contact the Teaching Excellence team at teaching.learning@manchester.ac.uk.

Evaluating Your Practice: An Online Event for University Staff, 20 January 2025

The Evaluating your Practice event brought staff from across the University together to explore different evaluation strategies to enhance professional and teaching practice across three themes:

- Innovation in education

- Equality of opportunity

- Evaluation skills development

| Innovation in Education | Equality of Opportunities |
|---|---|
| Evaluating practice to create TEF case studies: Jen McBride will lead this session on the importance of evaluation as part of the University's TEF plans. | Operationalising the Access and Participation Plan: Sophie Flieshman and Jack Walker introduce the APP and plans to embed it across the University. |
| Evaluation & Personal Development: James Brooks explores the approaches for personal development and evaluation. | APP Case study: Access and Success team will bring the Access and Participation Plan to life through a case study. |
| Evaluation Lessons Learnt (so far): This session will explore lessons learnt from three projects that have designed evaluation at their inceptions. You'll hear from Alan Davies & Fran Hooley (FBMH), Charles Walkden & Fiona Lynch (FSE), and Gary Vear (FHUM). | Understanding Type 3 evaluation: Access and Success team introduce Type 3 evaluations through examples, guidance and resources you can use. |

Case study: Evaluating the PgCert in Clinical Data Science (level 1)

The PgCert in Clinical Data Science is a co-created course developed in partnership with public patient representatives and in consultation with The Christie and NHS England. The course is aligned to NHS values, aiming to upskill the healthcare workforce with data science, statistics and machine learning capabilities, and change management knowledge and skills.

Developing a Theory of Change

First, the course team developed a Theory of Change to plan the evaluation and determine what the short, medium and long term outcomes and impact of the course should be. Developing the Theory of Change enabled the team to agree seven evaluation themes and research questions:

- Knowledge, confidence and skills: Has the course helped develop foundational clinical data science knowledge and skills?

- Authentic and experiential: Did the learners find the case studies / exemplars / notebooks provided real world context and opportunity to get hands-on experience applying some of the tech concepts covered?

- Personalised and flexible: Has the course been personalised and flexible enough to meet the needs of all students?

- Interdisciplinarity: Did the group work or face to face day give a taste of interdisciplinary / team science working?

- Self-efficacy and agency: Has the PG Cert encouraged all students to exercise agency and develop self-regulated learning skills?

- Satisfaction: Did learners enjoy the course to a level they would recommend it?

- Diverse and inclusive: Was the cohort a broad mix of learners from across professional disciplines and levels?

By phasing the outcomes, it was easier to see how each element contributed to the final impact which was to enable all learners to embark on a digital transformation project of their own. Next, the team used the Evaluation Cycle Framework to embed the evaluation into the course.

Using the Evaluation Cycle Framework

| Stage | Actions |
|---|---|
| Design | Resources to help you develop an evaluation plan |
| Deliver | Resources to help you develop an evaluation checklist |
| Evaluate | |
| Learn | |
| Share | |
| Review | |

Case study: Embedding evaluation into learning design (level 1)

At the Evaluating your Practice event (January 2025), Tamara Montrose (Transnational Education), Diane Bennet (Transnational Education) and Fran Hooley (Senior Lecturer in Digital Health Education) shared their experiences of embedding evaluation into learning design.