Evaluation methods

There are many different methods and tools you can use to collect data for your evaluation. The methods and tools you use will depend on a range of factors such as:

- the size and scale of your evaluation

- what you are trying to evaluate

- the design of your evaluation

- the skills and knowledge available to you to gather different types of evidence

TASO (Transforming Access and Student Outcomes) highlights three broad types of evidence:

- Narrative: A clear narrative that an activity is effective

- Empirical: Tangible data showing that an activity is associated with better outcomes for students

- Causal: Directly attributable “causal impact” on outcomes for students

It's important to remember that there is no hierarchy of evidence; all evidence is equally valuable and forms part of a bigger picture.

Evidence of impact

Austen and Jones-Devitt (2024) highlighted ten types of evidence you may consider to help demonstrate your impact or the impact of an intervention or activity:

- Evidence of difference: Evidence to show your intervention has made a difference e.g., increased student satisfaction

- Evidence of scale: Evidence to show your impact is on a significant or sizeable scale e.g., increased percentage of first-time passes

- Evidence of attribution: Evidence that helps to elaborate the intricate or complex links between the intervention and impact e.g., enhanced student engagement

- Evidence of quality: Evidence to show that you have achieved impact through a high-quality intervention e.g., improved student outcomes

- Evidence of partnership: Evidence to show how partnerships contributed to impact e.g., expanded reach

- Evidence of engagement: Evidence to show that engagement of stakeholders, practitioners or public is integral to the intervention e.g., students as partners and co-creators

- Evidence of experience: Evidence to show that individuals involved have a strong personal track record in the intervention area e.g., longevity, testimonials

- Corroborative evidence: Evidence to corroborate the impact of your intervention e.g., student surveys, course unit evaluations

- Evidence of accessibility: Evidence to show that you have made information about your intervention accessible e.g., enhanced engagement, increased number of hits

- Evidence of recognition: Evidence to show that sector users and other audiences recognise and value your intervention e.g., awards, keynote presentations and invitations, testimonials from external partners

Creative methods of evaluation

Using creative methods of evaluation has several benefits and can be particularly useful in an environment where staff and students are time poor and experiencing survey fatigue.

Creative methods of evaluation can help ensure that there is effective representation of student voice, that approaches to evaluation are participatory and collaborative and that evaluative practice is embedded in your day-to-day practice.

Some examples of creative evaluation methods include:

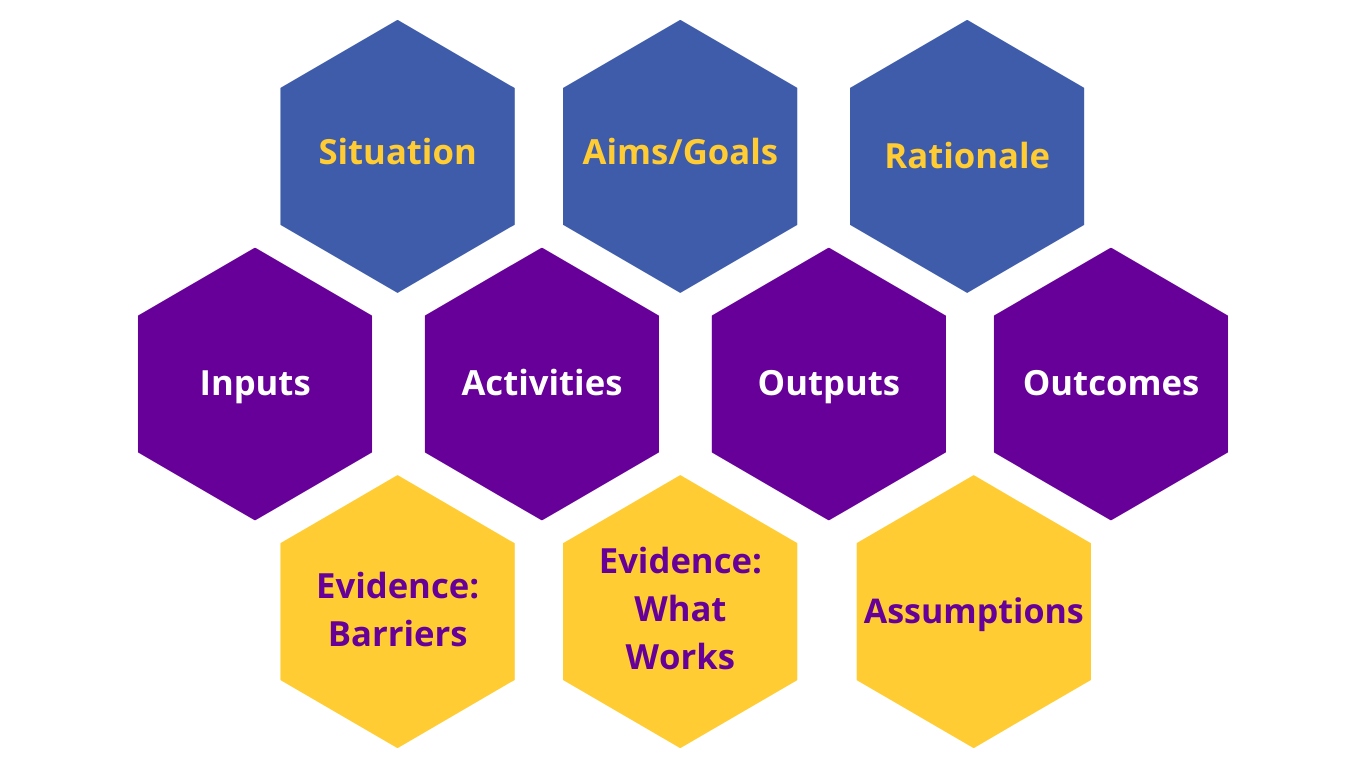

Theory of Change

Theory of Change helps you to develop a roadmap that clearly explains and shows why a specific change is likely to occur in a certain situation. It focuses on detailing or bridging the gap, often referred to as the "missing middle," between the actions taken and the achievement of the desired goals.

Theory of Change provides a systematic way of understanding an intervention's or activity's contribution to potential outcomes and impacts.

Resources

Contribution analysis


Contribution analysis is an evaluation method that can be useful when the outcomes of an activity or event have been particularly successful or unsuccessful. It aims to capture learning from these activities to inform effective evaluations and planning in future.

Contribution analysis provides a systematic way of understanding an intervention's contribution to observed outcomes or impacts.

Resources

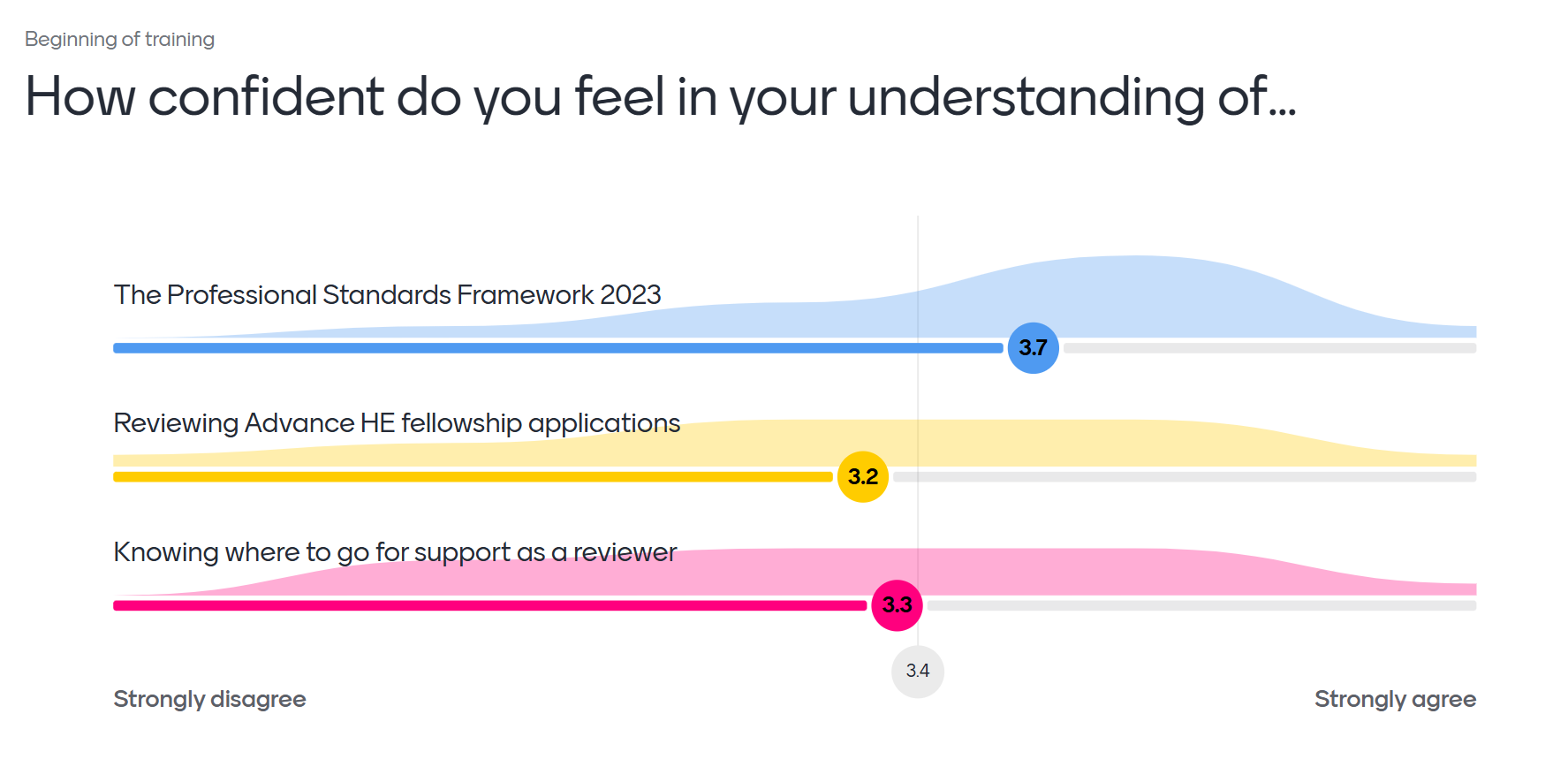

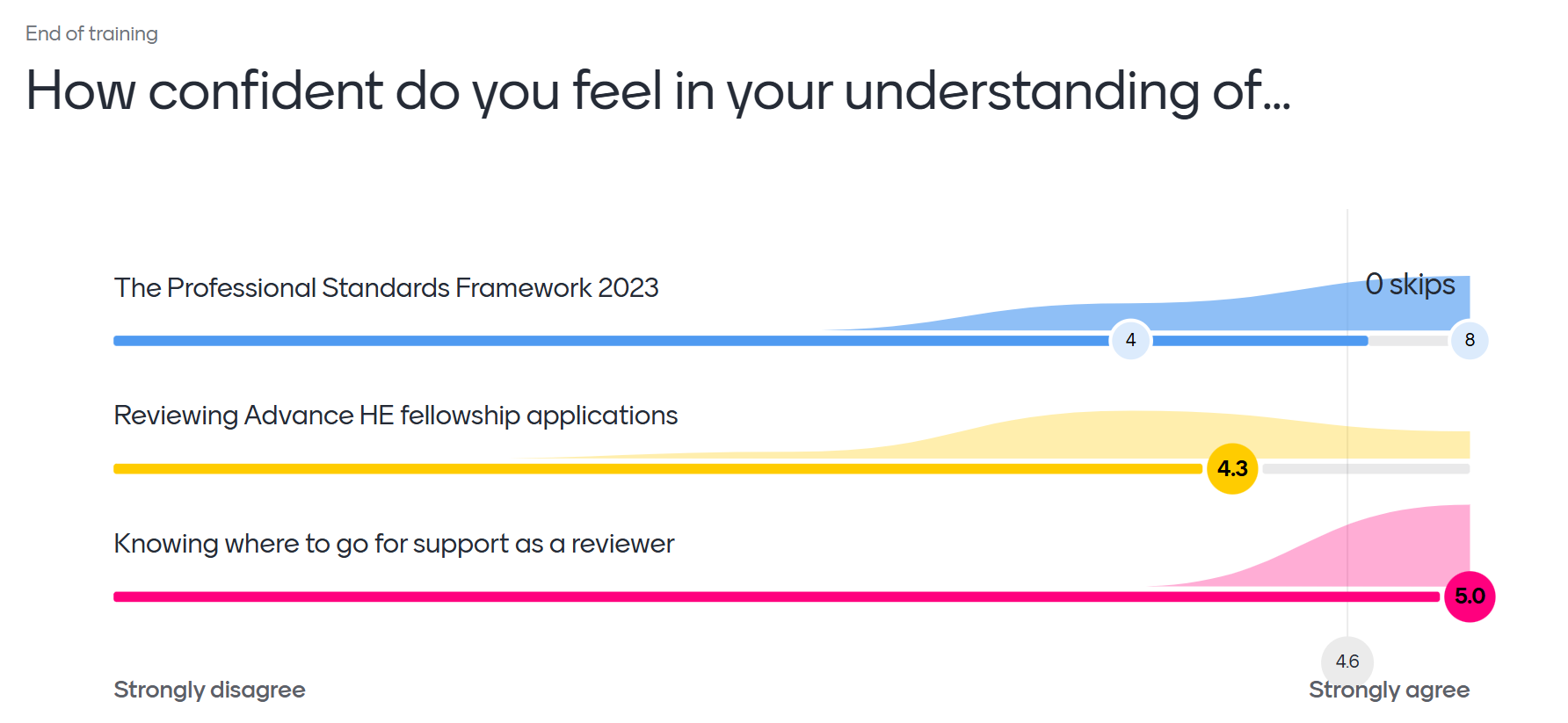

Pre and post test

A pre and post test is a useful tool to help you assess the effectiveness of an activity by comparing results from before the activity with those gathered immediately or shortly after it. This can also be useful in helping you to flex the learning journey in response to learner needs.

A pre and post test provides a way of understanding difference before and after an intervention.
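As an illustration only, the before-and-after comparison can be sketched in a few lines of Python. The function name `paired_change`, the 1 to 5 confidence scale and the scores below are all hypothetical and are not drawn from any University dataset or tool. The sketch assumes each participant completed both tests, and it gives a simple descriptive summary rather than evidence of causal impact.

```python
from statistics import mean


def paired_change(pre_scores, post_scores):
    """Summarise the change between paired pre- and post-test scores.

    Scores are matched by position: pre_scores[i] and post_scores[i]
    must belong to the same participant.
    """
    if len(pre_scores) != len(post_scores):
        raise ValueError("Each participant needs both a pre and a post score")
    if not pre_scores:
        raise ValueError("At least one participant is required")

    # Change for each participant (positive means the score went up)
    changes = [post - pre for pre, post in zip(pre_scores, post_scores)]

    return {
        "participants": len(changes),
        "mean_pre": mean(pre_scores),
        "mean_post": mean(post_scores),
        "mean_change": mean(changes),
        "improved": sum(1 for change in changes if change > 0),
    }


# Hypothetical self-rated confidence (1 to 5) for five participants
pre = [2, 3, 3, 1, 4]
post = [4, 3, 5, 3, 4]

summary = paired_change(pre, post)
print(summary)
```

Looking at the number of participants who improved alongside the mean change helps to show whether a shift is shared across the cohort or driven by a few individuals.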

Digital storytelling

Digital storytelling is a qualitative evaluation approach inspired by the Most Significant Change (MSC) technique. In digital storytelling, you collect stories from people about their experience and bring people together to discuss the stories and what can be learned from them.

When using digital storytelling to gather data, you will need to:

- Recruit and train collectors

- Identify and brief storytellers

- Facilitate the storytellers and collectors to have a conversation (ensuring the conversation is recorded)

- Run a story discussion session to ensure the stories and learning are shared

Whilst this approach can be resource intensive and may require additional funding to reimburse storytellers and collectors, the biggest benefit is that digital storytelling centres the experiences of those most involved and enables the collection of meaningful, rich data. The process is collaborative, enjoyable, and innovative. It is also important to be mindful of the power dynamic between the storytellers and collectors (e.g., between staff and students).

Resources

- Better Evaluation: Most Significant Change approach

- STEER Blog: Digital Storytelling Transitions from Study into Employment: Experiences of Graduate Interns 2021-2022

- Austen, L., Pickering, N. and Judge, M. (2021). 'Student reflections on the pedagogy of transitions into higher education, through digital storytelling'. Journal of Further and Higher Education, 45(3), pp.337-348. https://doi.org/10.1080/0309877X.2020.1762171

Reflective journals

Using reflective journals is a useful method to help you evaluate small cohorts. Reflective journals enable you to delve deeper into why a course works and can produce rich data on how the course has run over time.

To use reflective journals effectively, you should include the journal activity as part of the course design. This will help you to look at engagement and motivation and gather short- and medium-term reflections.

Resources

Appreciative inquiry

Appreciative inquiry enables you to look at what works rather than what hasn't worked - it enables you to evaluate through a positive lens.

Resources

- Better Evaluation: Appreciative inquiry (opens in new window)

- Evaluation Collective: Wicked Evaluation Problems - Using Appreciate Inquiry to embed evaluation (opens in new window)

- [Accessible format] Evaluation Collective: Wicked Evaluation Problems - Using Appreciate Inquiry to embed evaluation (opens in new window)

After Action Review (AAR)

After Action Review is a useful approach when outcomes of an activity or event have been particularly successful or unsuccessful. This approach aims to capture learning from the activity or event to inform future planning and successes.

When undertaking an After Action Review, all those involved in the activity or event have a role to play. To ensure you get the best data, it's important that everyone feels able to contribute equally, safely and without fear of blame. Key questions you may consider when conducting an After Action Review include:

- What was supposed to happen?

- What did happen?

- What caused the difference?

- What was the learning for next time?

Resources

- Better Evaluation: After Action Review (opens in new window)

- NHS Learning Handbook: After Action Review (opens in new window)

For further information about creative evaluation methods you can watch a recording of the 'Creative Evaluation Methods and Tools' session delivered as part of the University's 'Evaluating your Practice' event (January 2025). In this session, Fran Hooley highlights some key tools, methods and approaches to help you evaluate your practice.

What if my evidence feels intangible?

When evaluating your practice, your evidence can occasionally feel intangible or not fully reflected in the quantitative data. If your evidence feels intangible, there are things you can do. This Wicked Evaluation Problem zine from the Evaluation Collective uses an example to explore what you can do and possible next steps in this situation.

- Evaluation Collective: Wicked Evaluation Problems - Power and Value (and intangible evidence) (opens in new window)

- [Accessible format] Evaluation Collective: Wicked Evaluation Problems - Power and Value (and intangible evidence) (opens in new window)

Further resources

University resources

- Padlet: UoM Creative methods and tools (opens in new window) - this Padlet collates methods, tools and approaches sourced from colleagues across the University including practical tips and examples. Please add your suggestions, advice or examples.

- Frequently asked questions: Monitoring and evaluation at the University of Manchester by the Access and Success team (opens in new window - link to Access and Success team SharePoint site)

Sector resources

- Evaluation Collective (opens in new window) - a cross-sector group of evaluation advocates working to enhance student outcomes in HE.

- Better Evaluation - includes database of evaluation methods, tools and approaches (opens in new window) - Better Evaluation shares knowledge, guidance and tips from the Global Evaluation Initiative, a global coalition of organisations and experts working to support better monitoring, evaluation, and evidence-informed decision making.

- Evaluation 4 All - includes the Universal Evaluation Framework (opens in new window) - To access Evaluation 4 All, please register for an account and use the University of Manchester's registration code, 109013. Evaluation 4 All and the Universal Evaluation Framework provide a step-by-step guide for those both new to and experienced in evaluation.

- Transforming Access and Student Outcomes in Higher Education (TASO) (opens in new window) - an independent hub for the HE sector which aims to close equality gaps in HE by driving the use of evidence-informed practice.

- QAA: Staff guide to using evidence (opens in new window) - this practical guide is designed to support HE professionals across a range of evaluation activity from responding to student feedback to developing and evaluating strategy.

Recommended reading

- Parsons, D. (2017). Demystifying evaluation: practical approaches for researchers and users. Bristol: Policy Press. [online]. (opens in new window)

- Christie, C.A. and Alkin, M.C. (2008). Evaluation theory tree re-examined. Studies in educational evaluation, 34(3), pp.131–135. (opens in new window)

- Lemire, S. (2024). The Evaluation Metro Map. Journal of multidisciplinary evaluation, 20(48), pp.35–40. (opens in new window)